Anthony Strock

Working memory in random recurrent neural networks

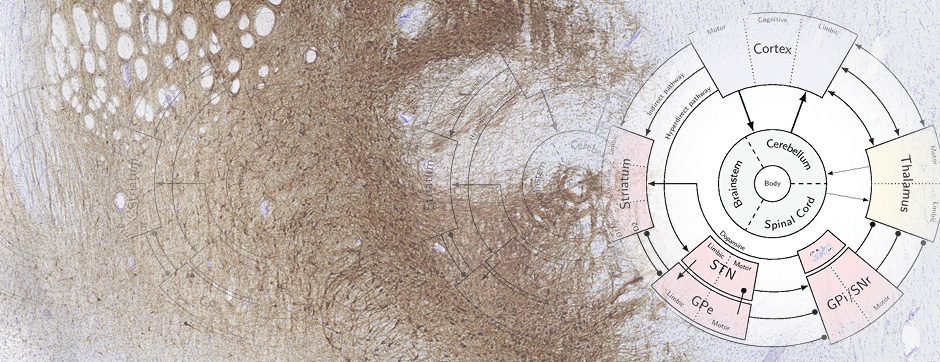

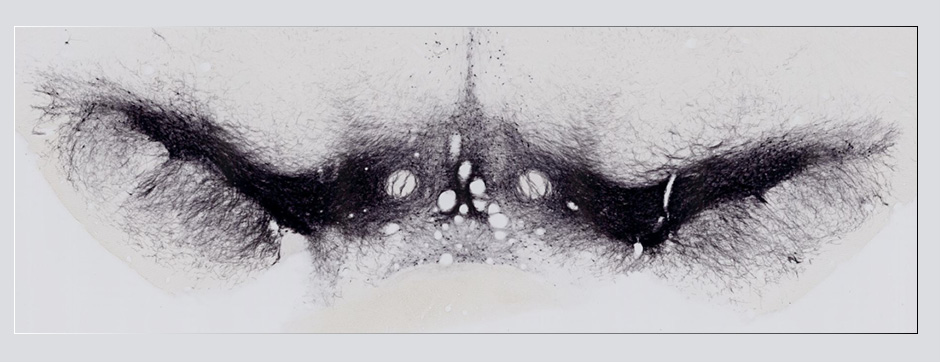

November 2020

Thesis supervisors: Xavier Hinaut and Nicolas Rougier

Thesis abstract

Working memory can be defined as the ability to temporarily store and manipulate information of any kind. For example, imagine that you are asked to mentally add a series of numbers. To accomplish this task, you need to keep track of a partial sum that must be updated every time a new number is given. Working memory is precisely what makes it possible to maintain the partial sum (i.e. temporarily store it) and to update it (i.e. manipulate it).

In this thesis, we explore neural implementations of working memory under a limited number of assumptions. To do this, we work in the general context of recurrent neural networks and use, in particular, the reservoir computing paradigm. Although very simple, this type of model produces dynamics that learning can exploit to solve a given task. In this work, the task to be performed is a gated working memory task. The model receives as input a signal that controls the update of the memory. When the gate is closed, the model should maintain its current memory state; when the gate is open, it should update that state based on an input. In our approach, this additional input is present at all times, even when there is no update to perform. In other words, we require our model to be an open system, i.e. a system that is constantly disturbed by its inputs but must nevertheless learn to keep a stable memory.

In the first part of this work, we present the architecture of the model and its properties, then we assess its robustness through a parameter sensitivity study. This study shows that the model is extremely robust over a wide range of parameters: more or less any random population of neurons can be used to perform gating.
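To make the gated task described above concrete, here is a minimal sketch of how its signals could be generated. It is an illustration under stated assumptions, not the thesis's actual protocol: the function name `gated_memory_task`, the uniform input range, the gate probability, and the choice to open the gate on the first step are all hypothetical.

```python
import numpy as np

def gated_memory_task(n_steps, gate_prob=0.05, seed=0):
    """Generate one trial of a gated working memory task (illustrative only).

    Returns three arrays of length n_steps:
      value  : input that is present at every time step
      gate   : 1.0 when the gate is open, 0.0 when it is closed
      target : value the memory should hold at each time step
    """
    rng = np.random.default_rng(seed)
    # The input is always present, even when no update is requested
    # (assumed here to be uniform in [-1, 1]).
    value = rng.uniform(-1.0, 1.0, size=n_steps)
    # The gate opens at random time steps (assumed probability gate_prob).
    gate = (rng.random(n_steps) < gate_prob).astype(float)
    # Assumption: the gate is open on the first step so the memory is defined.
    gate[0] = 1.0

    target = np.empty(n_steps)
    memory = 0.0
    for t in range(n_steps):
        if gate[t] == 1.0:
            memory = value[t]  # gate open: update the memory from the input
        target[t] = memory     # gate closed: keep the previous memory
    return value, gate, target
```

A model trained on this task would receive `value` and `gate` as inputs and would be asked to reproduce `target`, which changes only at the time steps where the gate is open.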
Furthermore, after learning, we highlight an interesting property of the model, namely that information can be maintained in a fully distributed manner, i.e. without being correlated with any individual neuron but only with the dynamics of the group. More precisely, working memory is not correlated with the sustained activity of neurons, a phenomenon that has long been reported in the literature and has recently been questioned experimentally. This model confirms these results at the theoretical level.

In the second part of this work, we show how the models obtained by learning can be extended to manipulate the information held in the latent space. To this end, we consider conceptors, which can be thought of as a set of synaptic weights that constrain the dynamics of the reservoir and direct it towards particular subspaces, for example subspaces corresponding to the maintenance of a particular value. More generally, we show that these conceptors can maintain not only information but also functions. In the mental arithmetic example mentioned above, conceptors make it possible to remember the operation to be carried out and to apply it to the successive inputs given to the system. Conceptors therefore make it possible to instantiate a procedural working memory in addition to the declarative working memory. We conclude this work by putting this theoretical model into perspective with respect to biology and neuroscience.
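As an illustration of the conceptors mentioned above, the sketch below computes one from reservoir states using the standard definition from the conceptor literature, C = R (R + α⁻² I)⁻¹, where R is the correlation matrix of the states and α is the aperture. It is a sketch under assumptions, not the thesis's implementation: the helper names `collect_states` and `compute_conceptor`, the reservoir size, the spectral radius of 0.9, the noise level, and the aperture value are all hypothetical.

```python
import numpy as np

def collect_states(value, n_units=50, n_steps=500, washout=100, seed=0):
    """Drive a random tanh reservoir with a noisy constant input.

    All sizes and scalings are illustrative assumptions.
    Returns an array of shape (n_steps - washout, n_units).
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_units, n_units))
    # Rescale the recurrent weights to an assumed spectral radius of 0.9.
    W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = rng.uniform(-1.0, 1.0, size=n_units)

    x = np.zeros(n_units)
    states = []
    for t in range(n_steps):
        u = value + 0.1 * rng.normal()    # constant value plus small noise
        x = np.tanh(W @ x + w_in * u)     # reservoir update
        if t >= washout:                  # discard the initial transient
            states.append(x)
    return np.array(states)

def compute_conceptor(states, aperture=10.0):
    """Conceptor C = R (R + aperture^-2 I)^-1 for states of shape (T, N)."""
    n_steps, n_units = states.shape
    R = states.T @ states / n_steps       # state correlation matrix
    reg = R + (aperture ** -2) * np.eye(n_units)
    # R and reg commute, so solving reg @ C = R gives the same matrix
    # as R @ inv(reg) without forming an explicit inverse.
    return np.linalg.solve(reg, R)

states = collect_states(value=0.5)
C = compute_conceptor(states)
```

In the conceptor literature, such a matrix is typically inserted into the update rule, for example `x = C @ np.tanh(W @ x + ...)`, so that the reservoir state is softly projected onto the subspace occupied while the pattern was presented. The eigenvalues of `C` lie between 0 and 1, and a larger aperture pushes them closer to 1, which loosens the constraint.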